What DataSecAI 2025 Revealed About the Future of Data, Security, and AI

When more than 1,000 data, security, AI, privacy, and engineering leaders gathered in Dallas for DataSecAI25, the energy was impossible to miss. From the moment people stepped into the venue, conversations were underway. Practitioners compared real challenges. Security leaders traded stories about experiments with AI that took unexpected turns. Data teams looked for frameworks that might finally bring clarity to sprawling environments.

Beneath the buzz and excitement was a noticeable pattern. Most attendees arrived with the same set of big questions. How do we manage the speed of AI adoption? How do we get clearer on what data we truly have? How do we build governance that supports innovation instead of slowing it down? And how do we prepare for a future where humans are not the only ones interacting with sensitive data?

What stood out most were the themes that surfaced again and again. Together, they captured both the urgency and the opportunity that defined the conversations throughout DataSecAI. Here are the seven truths that came through most clearly this year:

1. AI governance is becoming a growth lever, not an obstacle

For years, governance carried the reputation of slowing organizations down. At DataSecAI, leaders painted a different picture. They described governance as the mechanism that helps their organizations move faster with more confidence. When teams understand what data they have, what is sensitive, and where risk concentrates, they can finally build responsible AI workflows instead of guessing where hazards might hide.

Several speakers described a similar arc. They began by gaining visibility. That visibility uncovered achievable wins. Those wins built trust across teams. And trust opened the door for broader collaboration and eventually automation. When governance works well, it clears a path rather than blocking one.

2. Saying “no” to AI is not a sustainable strategy

Leaders repeatedly acknowledged that AI already exists throughout their organizations whether they sanctioned it or not. Employees experiment with tools out of curiosity or necessity. Teams adopt SaaS solutions without waiting for approvals. Shadow AI grows in the background.

Blocking AI outright only pushes these behaviors into less visible channels. Instead of preventing risk, it hides it. Many leaders said they have shifted toward guiding AI use instead of trying to restrict it. Employees need guardrails, clarity, and context. They need to know what’s safe and what’s not. Demonstrations throughout the conference showed how organizations can allow experimentation while still preventing unsafe actions. This shift from prohibition to partnership marks one of the biggest cultural changes underway.

3. True data visibility is the new baseline for AI security

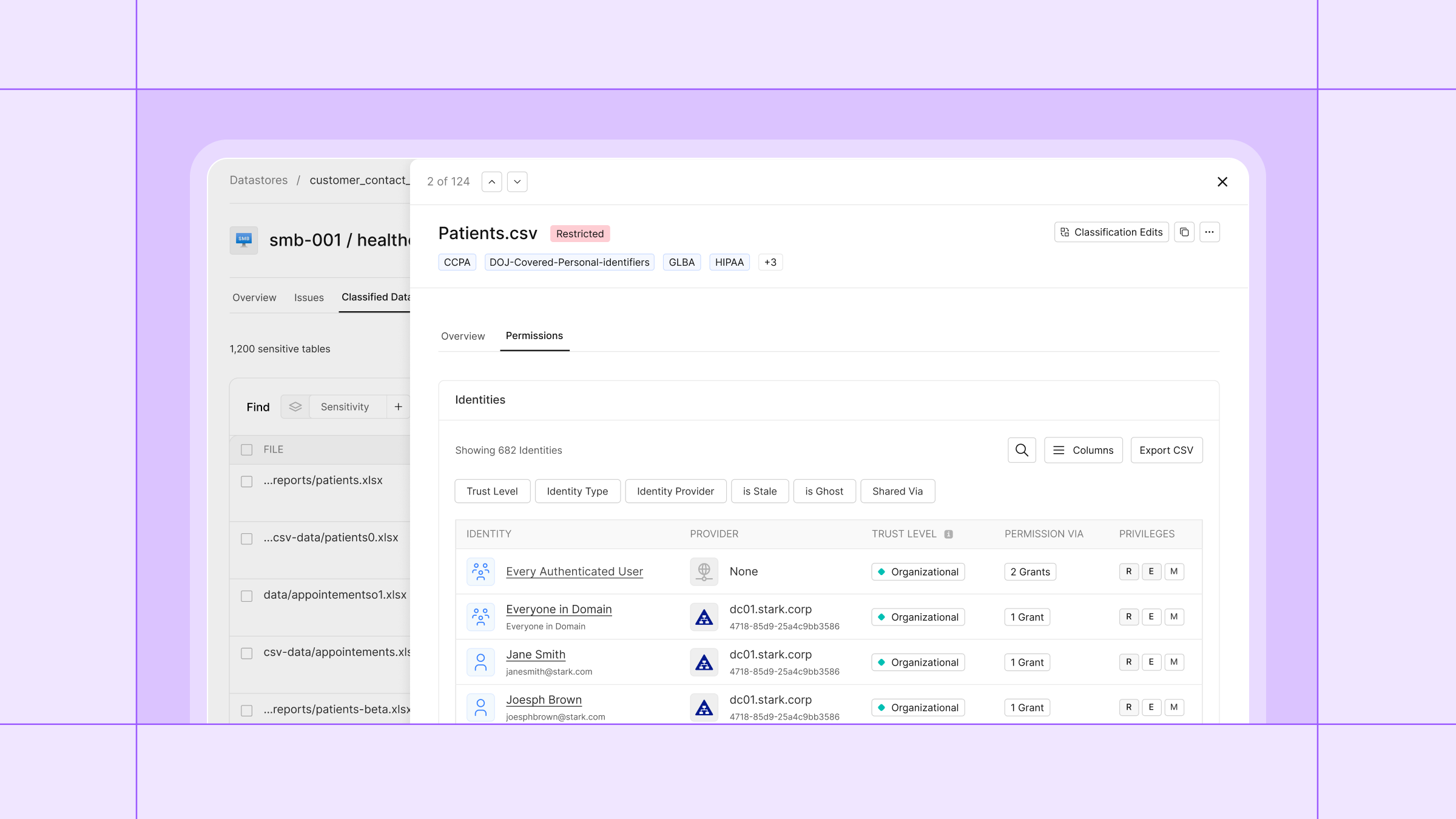

A recurring realization came up across keynotes and breakout sessions: many organizations still struggle to answer basic questions about their data. Leaders admitted they often don't know how much data they store, where it lives, what is sensitive, or who can access it. Even fewer know who actually accesses it day to day, whether human or agent.

In the past, gathering this information required months of manual effort and rarely produced a complete picture. At DataSecAI, teams showed how unified visibility across data, identities, and access is finally becoming realistic. This clarity isn’t just helpful. It’s essential. Without it, AI adoption becomes guesswork. With it, organizations can build a trustworthy, resilient foundation for everything that comes next.

4. Insider risk now looks very different

Some of the most memorable moments came from real-world examples of insider risk. A file labeled “Holiday Gifts” turned out to contain sensitive customer data. A developer copied proprietary code into ChatGPT using a personal account. A departing employee emailed themselves a zip file of credentials one hour after resigning.

None of these incidents stood out because they were dramatic. They stood out because they revealed how easily risk hides in everyday behavior. Insider risk doesn’t usually appear as a single catastrophic event anymore. It emerges from patterns. To detect those patterns, teams need context, not isolated alerts. Leaders emphasized that understanding intent requires correlations across time, systems, and identities. Without that broader view, organizations end up reacting to symptoms instead of addressing the underlying behavior.

5. Human-in-the-loop oversight is important, but it will not scale forever

As AI agents become more common, organizations face a new kind of oversight challenge. Agents request access the way humans do. They perform tasks autonomously. They interact with systems continuously, not intermittently. In one example shared on stage, agents entered a logic loop that drove cloud costs into the millions before anyone intervened.

These stories sparked conversations about the future of oversight. Humans still sit at the center of decision-making, but manual review cannot keep up with the volume and speed of agent activity. Leaders discussed the need for systems that monitor agents in real time, identify anomalous behavior, and enforce guardrails automatically. Oversight must evolve from manual intervention to coordinated, automated supervision.

6. Workforces are starting to manage AI, not just use it

Several speakers described a workplace where employees manage AI capabilities as part of their daily responsibilities. Instead of executing every task themselves, they oversee agents, refine prompts, review outputs, and guide automated workflows. Some companies have even begun reorganizing leadership roles to include responsibilities across data, digital operations, and AI enablement.

This transition requires new skills and deeper collaboration across disciplines. It also demands governance that helps employees navigate AI safely and effectively. Conversations at DataSecAI made it clear that organizations are not simply adopting new tools. They are rethinking how work happens at its core.

7. The role of data and security teams is evolving into orchestration

A closing theme resonated across the event: data, security, and AI teams now serve as orchestrators of intelligence. They no longer simply deploy systems or maintain infrastructure. They guide how data flows, how decisions propagate, and how humans and agents collaborate across the organization.

This shift requires cross-functional cooperation, shared visibility, and a mindset that sees governance as an enabler of responsible progress. Attendees didn’t walk away with all the answers. But they did leave with a clearer sense of direction. The industry is moving toward integrated, adaptive governance that allows innovation to flourish without putting organizations at risk.

DataSecAI ended with a sense of momentum and collective clarity. The challenges ahead of us are real and growing, but the community tackling them is growing, too.

If you weren’t able to attend every session or want to revisit the ideas that shaped the week, all of the keynotes and major talks are now available to watch on demand. You can explore them at your own pace and hear directly from the leaders who helped define the themes of this year’s event.

Watch the DataSecAI keynotes on demand and continue the conversation with the community.

Gain full visibility with our Data Risk Assessment.