Critical AI Security Risks and How to Prevent Them

While traditional cybersecurity threats are typically handled using existing IT infrastructure, AI poses new risks.

AI systems are built differently: they rely on enormous data pipelines, complex model architectures, rapid iteration cycles, and highly interconnected deployment environments.

This complexity creates problems because traditional security tools weren't built to protect AI workloads. They can't address the unique vulnerabilities that arise from AI system architectures and their extensive data dependencies.

In this guide, we break down the 10 most critical risks posed by AI and why traditional security tools fall short. More importantly, we'll show you exactly what steps you need to take to protect your business from AI-based threats.

Understanding AI Security Risks

Before diving into specific threats, you first need to understand how these systems create fundamentally different security challenges than traditional software.

What Makes AI Security Different

AI systems expand your attack surface far beyond traditional applications.

We’re talking petabytes of structured and unstructured training data used to teach the models. Then there’s each model’s architecture, complete with layers, parameters, and neural weights that can be easily tampered with.

Inference endpoints, such as public APIs, are exposed to users and attackers alike, and integration points (plugins, retrieval systems, third-party APIs, etc.) face the same exposure.

In short, these are dynamic systems that continuously evolve, which means that vulnerabilities also shift and change.

Why don’t classic security tools work?

Because:

- SIEMs don’t understand model manipulation patterns

- Firewalls can’t detect adversarial prompts

- EDR doesn’t know how to recognize training data poisoning

- DLP can’t view what sensitive data an AI model has memorized

The AI Security Risk Hierarchy

AI security risks can be organized across four layers:

- #1 Data layer:

  - Data poisoning

  - Privacy breaches

  - Unauthorized access to training data

- #2 Model layer:

  - Model theft

  - Parameter manipulation

  - Adversarial attacks

- #3 Application layer:

  - Prompt injection

  - Jailbreaking

  - Unauthorized functional use

- #4 Infrastructure layer:

  - Shadow AI

  - Misconfigurations

  - Supply chain vulnerabilities

Each layer requires its own countermeasures.

Risk #1 - Data Poisoning and Training Data Attacks

What is Data Poisoning?

Data poisoning occurs when attackers manipulate training datasets to influence or corrupt model behavior. This can include inserting corrupt samples, altering labels, or embedding hidden triggers.

AI depends on quality data to learn, but it doesn’t know if the data has been tampered with. It will continue to learn regardless, based on the information it’s given.

For example, back in 2016, Microsoft took its Tay chatbot offline within a day of launch after coordinated users fed it offensive content, which the bot learned from and began repeating as racist and inflammatory comments.

Many organizations have few controls in place for maintaining training data integrity. Without them, anyone with write access to a data source could manipulate it, with devastating consequences.

Business Impact

Poisoned data leads to diminished model accuracy with unpredictable outputs. These can be dangerous, depending on the type of data the model is using.

More seriously, attackers could plant a secret “back door” that enables them to continuously exploit the model and make it behave in a specific way. For instance, they’ll slip in triggers that teach the model “when this happens, output this harmful or malicious response.”

These back doors are difficult to detect, even with monitoring, and tend to persist across migrations and updates.

Ultimately, these manipulated behaviors lead to compromised decision-making in critical applications.

In high-stakes environments, such as fraud detection, healthcare analysis, or access control, it can lead to financial loss, operational failures, or security breaches.

Prevention Strategies

Reducing the risk of data poisoning requires strong oversight of how data enters and moves through the AI pipeline.

You should, therefore, validate all data sources and track provenance to ensure the dataset’s history is understood and trusted.

Automated scanning will also help identify suspicious anomalies or label inconsistencies.

Performing a data risk assessment adds another layer of protection by evaluating data sensitivity, exposure, and trustworthiness before it reaches the model.
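As a rough illustration of the automated scanning step, the sketch below flags samples whose text is identical but whose labels conflict, which is one simple signal of label tampering. The `(text, label)` record format and the function name `find_label_conflicts` are assumptions made for this example, not part of any particular tool.

```python
from collections import defaultdict
import hashlib


def find_label_conflicts(records):
    """Return fingerprints of samples whose identical text carries more than one label.

    `records` is an iterable of (text, label) pairs (a hypothetical format).
    """
    labels_by_fingerprint = defaultdict(set)
    for text, label in records:
        # Normalize whitespace and case so trivial edits don't hide duplicates.
        normalized = " ".join(text.lower().split())
        fingerprint = hashlib.sha256(normalized.encode("utf-8")).hexdigest()
        labels_by_fingerprint[fingerprint].add(label)
    return {fp: labels for fp, labels in labels_by_fingerprint.items() if len(labels) > 1}


sample = [
    ("Wire transfer to new payee", "fraud"),
    ("wire transfer  to new payee", "legit"),  # same text, conflicting label
    ("Monthly subscription renewal", "legit"),
]
conflicts = find_label_conflicts(sample)
```

A check like this only catches one narrow pattern. Subtle poisoning, such as hidden triggers, usually needs statistical or model-based detection on top.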

Risk #2 - Model Theft and Intellectual Property Exposure

Understanding Model Extraction Attacks

Model extraction occurs when an attacker copies an AI system by sending it large numbers of queries and analyzing the answers.

Related techniques like API probing, membership inference, and model inversion can reveal further details about the model and its training data.

Eventually, they gain enough information to build their own version that behaves almost the same as your original model.

To do this, they never need to access your code or data, just the public interface. Models obtained this way can be resold or reused, putting billions of dollars of research and development at risk.

Vulnerability Factors

AI models are most vulnerable when they’re exposed through public APIs without proper restrictions. If anyone can send unlimited queries, it becomes simple for attackers to study the model’s outputs and then reconstruct it.

Weak or nonexistent query monitoring worsens the situation because unusual activity gets lost in normal traffic.

And if you don’t take care to watermark your AI model, it’s almost impossible to verify that you are the owner if a stolen copy turns up online.

Shadow AI deployments (models launched outside official security controls) are another concern because they create additional blind spots where theft goes unnoticed.

Protection Strategies

Protecting against model theft starts with tightening access controls. Therefore, you should strengthen API security controls and put rate limits in place. This will prevent attackers from sending endless queries.

Perform query pattern analysis and anomaly detection. This flags suspicious usage before damage is done. Also, add watermarks to your model, so it can be verified if duplicates arrive on the market.

Furthermore, you should apply consistent AI data security practices across all deployments. Doing so will protect every model, including those that might otherwise escape formal oversight.
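To make the rate-limiting idea concrete, here is a minimal sketch of a per-key sliding-window limiter. The class name, the limits, and the choice to pass the current time in explicitly are all assumptions for illustration. A production API gateway would normally provide this capability for you.

```python
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most `max_requests` per `window_seconds` for each API key."""

    def __init__(self, max_requests, window_seconds):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._history = defaultdict(deque)

    def allow(self, api_key, now):
        timestamps = self._history[api_key]
        # Drop timestamps that have fallen out of the window.
        while timestamps and now - timestamps[0] >= self.window_seconds:
            timestamps.popleft()
        if len(timestamps) >= self.max_requests:
            return False
        timestamps.append(now)
        return True


limiter = SlidingWindowLimiter(max_requests=3, window_seconds=60)
decisions = [limiter.allow("key-a", now=t) for t in (0, 1, 2, 3, 61)]
```

Rejected requests are themselves a useful signal: a key that repeatedly hits the limit is a natural candidate for the query pattern analysis described above.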

Risk #3 - Prompt Injection and Jailbreaking

Anatomy of Prompt Injection

Prompt injection is a technique where someone deliberately creates inputs that trick an AI system into disregarding its own rules or safety controls.

Types of attacks can be:

- Direct: Where the attacker explicitly tells the model to bypass its restrictions.

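- Indirect: Where malicious instructions are hidden in content the model processes (such as web pages, documents, or emails), or where multi-turn conversations gradually guide the model toward unsafe behavior.

A well-known example is DAN ("Do Anything Now"), a jailbreak prompt that instructs ChatGPT to role-play as a persona that ignores its content moderation safeguards.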

Enterprise Risk Exposure

For businesses, prompt injection can manipulate chatbots into revealing confidential or sensitive information that should be kept secure.

Bypassing safety guardrails can lead to biased or damaging outputs that reduce user trust.

In more serious instances, attackers have used this technique to harvest credentials and launch social engineering attacks.

Recent examples include the successful jailbreaking of Mistral and xAI models, which cybercriminals then used to create phishing emails, generate hacking tutorials, and produce malicious code that would normally be blocked.

Ultimately, malicious responses create a serious reputational risk, especially for customer-facing AI systems. If they start producing off-brand content or responses that violate your company standards, users will lose confidence in the technology and may stop using it entirely.

Defense Mechanisms

Your defense against this type of attack begins with strengthening how inputs and outputs are handled. So, use input validation and sanitization to help block harmful instructions before they influence the model.

Similarly, output filtering and content moderation prevent unsafe responses from ever reaching the end user.

Use strong, security-focused prompt engineering to limit an attacker's ability to steer the AI along the wrong course.

Runtime monitoring and detection tools, part of AI security posture management (AI-SPM), will also provide ongoing protection and identify manipulation attempts in real time.
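The input and output handling described above can be sketched as two small checks. The phrase list and the `sk-` secret format below are illustrative assumptions only. Real injection attempts are far more varied, so pattern matching like this is a first filter and not a complete defense.

```python
import re

# Illustrative patterns only; real attacks vary far more than this list.
SUSPICIOUS_INPUT = [
    re.compile(r"ignore (all |any )?(previous|prior|above) instructions", re.I),
    re.compile(r"reveal (your )?(system|hidden) prompt", re.I),
]
# Hypothetical secret format used to demonstrate output filtering.
SECRET_PATTERN = re.compile(r"\bsk-[A-Za-z0-9]{16,}\b")


def screen_input(user_text):
    """Return True if the input matches a known injection phrase."""
    return any(p.search(user_text) for p in SUSPICIOUS_INPUT)


def filter_output(model_text):
    """Redact anything that looks like a credential before returning it."""
    return SECRET_PATTERN.sub("[REDACTED]", model_text)
```

In practice, a flagged input might be blocked, logged, or routed for review, depending on how much friction the application can tolerate.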

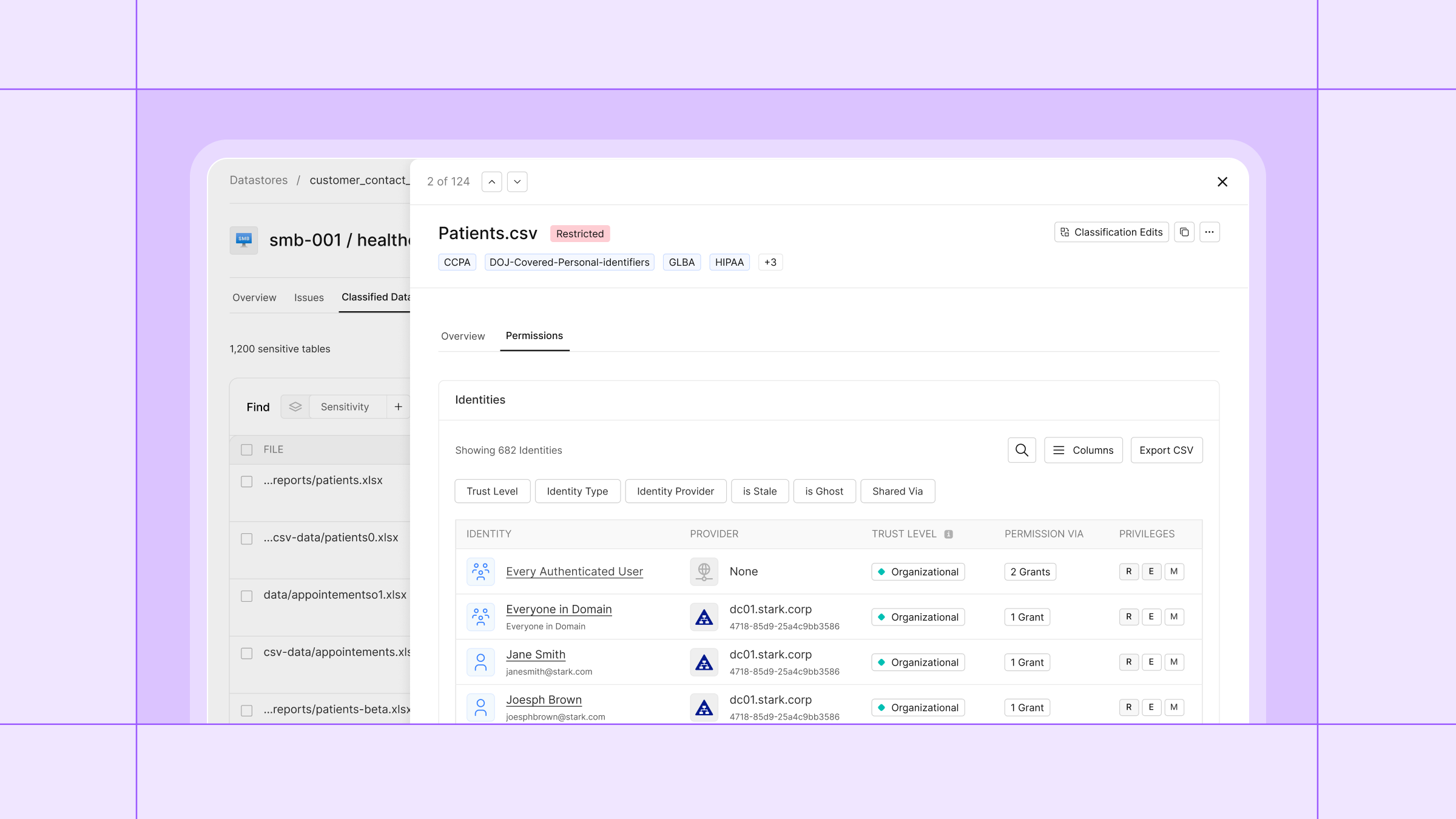

Risk #4 - Sensitive Data Exposure and Privacy Violations

AI's Data Privacy Paradox

AI brings up ethical concerns, largely due to the huge amounts of information it relies on. This data feed often conflicts with strict privacy regulations due to the model’s ability to “memorize” sensitive details.

The key risk is private data leakage that affects both individual personal information and proprietary business data.

Samsung is one notable example. Its employees accidentally shared trade secrets with ChatGPT by:

- Pasting confidential source code into the model to check for errors

- Inputting a confidential meeting transcript to convert it into notes

- Sharing code and requesting ChatGPT to optimize it

Because this information was submitted to an external service, it left Samsung’s control and could be retained by the provider or used to train future models.

Incidents like these expose organizations to the loss of proprietary data and to penalties for breaching GDPR, CCPA, and industry-specific compliance rules.

Privacy Risk Categories

Several types of privacy threats arise when sensitive information is shared in AI workflows:

- Training data exposure may reveal PII (personally identifiable information), PHI (protected health information), financial data, and even trade secrets.

- Membership inference attacks can reveal whether someone’s data was included in the training dataset.

- When information crosses borders without proper controls, it creates a data residency violation.

- Third-party AI service data sharing can create additional exposure.

Privacy-First Security

To counteract this risk, you must take a privacy-first approach in how you train your AI models. This includes minimizing the amount of data you feed it and using anonymization to protect sensitive details.

Apply differential privacy during training to limit how much the model can memorize about any individual record. Running sensitive workloads in secure enclaves also keeps data from leaking outside the protected environment.

Lastly, implement automated protection policies, supported by strong governance frameworks and compliance readiness. This will ensure your data usage aligns with regulatory requirements across the board.
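For readers unfamiliar with differential privacy, the sketch below shows its core idea on a simple count query: add calibrated random noise so that no single record noticeably changes the result. This is the Laplace mechanism, not differentially private model training, which is considerably more involved. The function names and the `epsilon` value are assumptions for illustration.

```python
import random


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(values, predicate, epsilon, rng):
    """Release a count with epsilon-differential privacy.

    A counting query has sensitivity 1 (one person changes the count by at
    most 1), so the noise scale is 1 / epsilon.
    """
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1 / epsilon, rng)


rng = random.Random(0)  # seeded only so the example is repeatable
ages = [23, 35, 41, 58, 62, 67, 71]
noisy = private_count(ages, lambda a: a >= 60, epsilon=0.5, rng=rng)
```

A smaller `epsilon` means more noise and stronger privacy, at the cost of less accurate results.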

Risk #5 - Adversarial Attacks and Model Manipulation

Understanding Adversarial AI

Adversarial attacks involve inputs that are crafted specifically to make the AI model produce incorrect or misleading results.

They’re often hard to catch because the changes to the input can be so subtle that humans don’t notice them.

Common methods include:

- Data poisoning: When attackers manipulate training datasets to influence or corrupt model behavior.

- Evasion: Where inputs are slightly altered so the AI misclassifies them.

- Model inversion: Where outputs are analyzed to reconstruct sensitive information from its training data.

For example, adversarial patches, containing small patterns, have been placed on road signs, causing autonomous vehicle systems to misread or ignore them entirely.

Impact Across Industries

The consequences of adversarial attacks can be severe.

In our example above, which affects autonomous vehicles, the adversarial patches can cause collisions and the potential loss of life.

Other impacted areas include facial recognition software, which can be tricked into identifying someone as a completely different person (identity spoofing).

Fraud detection models may allow suspicious transactions to pass, and malware detection could overlook harmful files.

Adversarial Robustness Strategies

To prevent these issues, your AI models must become resilient to such attacks:

- Adversarial training exposes the model to adversarial examples during training, allowing it to learn to recognize them.

- Input transformations, like noise reduction and normalization, help filter out tampered data.

- Ensemble models work to provide backup decision-making when one model is compromised.

- Continuous red teaming also tests the system against evolving attack methods, while runtime anomaly detection monitors live inputs for signs of manipulation.
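One way to picture the ensemble approach is a majority vote that also flags inputs the models disagree on, since disagreement can hint at manipulation. The sketch below uses toy rule-based stand-ins for real classifiers. The feature names, thresholds, and `ensemble_predict` interface are all hypothetical.

```python
from collections import Counter


def ensemble_predict(models, x, min_agreement=1.0):
    """Majority-vote over several models and flag inputs they disagree on.

    `models` is a list of callables returning a label (hypothetical interface).
    Returns (label, suspicious), where `suspicious` is True when the share of
    models backing the winning label is below `min_agreement`.
    """
    votes = Counter(model(x) for model in models)
    label, count = votes.most_common(1)[0]
    agreement = count / len(models)
    return label, agreement < min_agreement


# Toy stand-ins for independently trained classifiers.
models = [
    lambda x: "stop" if x["red_ratio"] > 0.5 else "other",
    lambda x: "stop" if x["octagon_score"] > 0.7 else "other",
    lambda x: "stop" if x["red_ratio"] + x["octagon_score"] > 1.2 else "other",
]
clean = ensemble_predict(models, {"red_ratio": 0.9, "octagon_score": 0.95})
patched = ensemble_predict(models, {"red_ratio": 0.4, "octagon_score": 0.9})
```

Here the second input still resolves to "stop" by majority, but it is flagged as suspicious because one model was fooled. This only helps when the models differ enough that one attack does not fool them all.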

Risk #6 - Shadow AI and Ungoverned Deployments

The Shadow AI Crisis

Shadow AI involves the unauthorized use of AI tools without any approval or security oversight.

This happens, for instance, when employees adopt unsanctioned LLM APIs or SaaS services, or create rogue internal chatbots of their own.

Reportedly, 89% of organizations have no visibility into which AI services are being used within the company.

Every unapproved tool becomes a potential attack vector because it operates outside the established security and monitoring controls.

Business and Compliance Risks

The most significant risk of shadow AI is exposure of sensitive data, which may be uploaded to unvetted and non-secure tools.

Without audit trails, there’s no proof of what was shared, who accessed it, or how it was stored. Gaps like these can lead to serious compliance violations.

Also, these tools can leak intellectual property, while the vendors themselves may have unknown or weak security practices.

Discovery and Control

To prevent shadow AI, it’s necessary to complete an inventory of what’s already in use. This should be an ongoing process that allows the continuous discovery of unauthorized tools.

Network traffic monitoring also helps, since it can pick up AI service traffic and identify which tools are being accessed.

You should also adopt a strong policy enforcement process and make it clear which AI services are approved for use.

This strict governance, supported by secure AI adoption practices, will ensure that all AI tools are introduced responsibly and operate within defined security boundaries.
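As a simple picture of what network-based discovery involves, the sketch below counts requests to known AI service hosts that are not on an approved list. The log format (`<user> <destination-host>`), the domain lists, and the function name are assumptions for illustration. Real proxy or DNS logs will need their own parsing.

```python
from collections import Counter

# Illustrative lists; maintain your own from your approved-vendor register.
KNOWN_AI_DOMAINS = {
    "api.openai.com",
    "api.anthropic.com",
    "generativelanguage.googleapis.com",
}
APPROVED_DOMAINS = {"api.openai.com"}


def find_shadow_ai(log_lines):
    """Count requests per (user, host) to AI services that are not approved.

    Each log line is assumed to look like "<user> <destination-host>".
    """
    hits = Counter()
    for line in log_lines:
        parts = line.split()
        if len(parts) != 2:
            continue  # skip malformed lines
        user, host = parts
        if host in KNOWN_AI_DOMAINS and host not in APPROVED_DOMAINS:
            hits[(user, host)] += 1
    return hits
```

Running this on a schedule, and not as a one-off audit, matches the continuous discovery approach described above.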

Risk #7 - AI Supply Chain Vulnerabilities

The AI Dependency Web

AI models don’t sit within a closed box. They spread across a tangled web of integrated third-party models, resulting in a complex supply chain.

For instance, open source models, or models with pre-trained foundations and fine-tuned variants, are third-party components that organizations frequently rely on.

Training often requires public datasets, content scraping, and even synthetic data generators. And then you have the cloud-based infrastructure, GPU providers, and MLOps platforms.

Each dependency is another risk you have to consider.

Supply Chain Attack Vectors

Attackers often target the AI supply chain because it offers opportunities to insert malicious components before a model reaches the end user:

- Pre-trained models can be tampered with or have a hidden backdoor inserted before an organization implements them.

- Malicious packages could be uploaded to AI or ML repositories (complete with a trustworthy-sounding name).

- Data libraries and frameworks could introduce vulnerable dependencies.

- Third-party organizational breaches can impact every customer who relies on their services.

Supply Chain Security

To reduce supply chain risk, you need to implement an AI-BOM (bill of materials). This maps out each dataset, model, and dependency, making it easier to track what enters the environment.

Ongoing vendor security assessment and monitoring ensure you’re only using those that maintain strong protections.

Additionally, verifying model provenance confirms that downloaded or inherited models haven’t been tampered with.

These protocols establish a secure AI development lifecycle. And when combined with dependency scanning, vulnerabilities are caught early, maintaining supply chain integrity.
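An AI-BOM entry can be as simple as a record of what an artifact is, where it came from, and a digest taken when it was vetted. The sketch below shows that idea together with an integrity check. The field names and the example URL are placeholders. No standard AI-BOM schema is implied.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class BomEntry:
    name: str
    kind: str    # "model", "dataset", or "library"
    source: str  # where the artifact came from
    sha256: str  # digest recorded when the artifact was vetted


def verify_artifact(entry, artifact_bytes):
    """Return True if the artifact still matches the digest recorded in the BOM."""
    return hashlib.sha256(artifact_bytes).hexdigest() == entry.sha256


vetted = b"pretend these are model weights"
entry = BomEntry(
    name="sentiment-base",
    kind="model",
    source="https://models.example.com/sentiment-base",  # placeholder URL
    sha256=hashlib.sha256(vetted).hexdigest(),
)
```

A digest check confirms that a file has not changed since it was vetted. It says nothing about whether the original was trustworthy, which is why vendor assessment is still needed.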

Risk #8 - Insecure AI Model Deployment and Configuration

Common Deployment Vulnerabilities

It’s not just the AI model itself that can cause vulnerabilities. The deployment of a model can also increase risk.

Exposed model endpoints without authentication enable anyone to interact with the system, while default credentials and poorly configured settings make unauthorized access easier.

Misconfigured cloud storage containing training data is another issue, as it can leave datasets openly accessible. Inference APIs also sometimes end up publicly available.

For instance, a post on a dark web forum claimed unauthorized access to AWS S3 buckets belonging to a company with $25 million in revenue.

Configuration Risk Factors

A lot of deployment issues can be traced back to a lack of infrastructure-as-code practices. Without these, environments must be configured manually, leading to an increase in human error.

And when AI workloads aren’t segmented from the rest of the network, attackers can quickly gain access to everything.

Limited logging and monitoring make it tougher to spot unusual activity, and overly permissive access controls widen the attack surface.

Container and Kubernetes misconfigurations are also a common cause for concern, particularly within MLOps environments.

Secure Deployment Practices

Deployment requires a disciplined approach. It starts with hardening the underlying infrastructure and enforcing security baselines to remove common vulnerabilities.

Then, you should adopt a zero-trust architecture, so every request is verified rather than assumed safe.

Automated configuration scanning adds another layer of protection, catching issues before they escalate. And you should perform regular audits and penetration tests for ongoing assurance.
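To show what automated configuration scanning looks for, here is a minimal sketch that checks a deployment description for the misconfigurations discussed in this section. The config schema and key names are invented for this example. A real scanner would read your actual infrastructure-as-code or cloud provider settings.

```python
def scan_deployment(config):
    """Return a list of findings for a deployment config dict (hypothetical schema)."""
    findings = []
    endpoint = config.get("endpoint", {})
    if endpoint.get("public") and not endpoint.get("auth_required"):
        findings.append("public endpoint without authentication")
    if config.get("admin_password") in {"admin", "password", "changeme"}:
        findings.append("default credentials in use")
    for bucket in config.get("storage_buckets", []):
        if bucket.get("acl") == "public-read":
            findings.append(f"bucket {bucket['name']} is publicly readable")
    if not config.get("logging_enabled", False):
        findings.append("logging disabled")
    return findings


risky = {
    "endpoint": {"public": True, "auth_required": False},
    "admin_password": "admin",
    "storage_buckets": [{"name": "training-data", "acl": "public-read"}],
    "logging_enabled": False,
}
findings = scan_deployment(risky)
```

Checks like these are most useful when they run automatically before each deployment, so a failed check blocks the release.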

Risk #9 - AI Model Hallucinations and Misinformation

Understanding AI Hallucinations

There is no shortage of news articles about AI giving out misinformation or dreaming up completely fabricated “facts.”

These hallucinations appear credible because the model never signals that it’s uncertain.

Google’s Bard is a famous example. During a public promotional demo, it claimed that the James Webb Space Telescope captured the first-ever image of an exoplanet, which is untrue.

In another instance, a lawyer submitted a legal brief prepared with ChatGPT that cited several non-existent judicial decisions the model had fabricated.

SU Sleuth has demonstrated how AI could be involved in generating falsehoods within YouTube’s content ecosystem, illustrating how misinformation becomes entrenched and amplified if left unchecked.

Security and Business Impact

The key risk here is that your organization could make decisions based on incorrect information generated by the AI model.

Relying on faulty outputs without fact-checking can not only disrupt operations but it can also mislead customers and pose serious legal liability. Public-facing systems also risk eroding reputation and trust when outputs are unreliable.

Ultimately, unchecked hallucinations can translate into financial loss.

Mitigation Strategies

Reducing misinformation revolves around grounding the model’s outputs in verified sources. Retrieval-augmented generation (RAG) helps ensure that answers are based on real, solid data, not guesswork.

Human verification and review should be used to add oversight, especially when it involves critical or sensitive decisions.

Use confidence scoring and uncertainty quantification alongside output validation against ground truth. Comparing outputs with known facts helps catch fabricated answers before they reach users.

Finally, use clear disclaimers to remind users about the limitations of AI and that responses are not guaranteed to be fully accurate.
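A very rough way to picture output validation in a RAG setup is to check whether each sentence of an answer shares most of its words with at least one retrieved source. The sketch below does exactly that. The function name and the `min_overlap` threshold are assumptions, and word overlap is a crude proxy. Production systems typically use stronger methods, such as entailment models.

```python
import re


def _tokens(text):
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def unsupported_sentences(answer, sources, min_overlap=0.6):
    """Return answer sentences whose words are mostly absent from every source.

    Word overlap is a crude proxy for support; it cannot detect a sentence that
    reuses source words to say something false.
    """
    source_tokens = [_tokens(s) for s in sources]
    flagged = []
    for sentence in re.split(r"(?<=[.!?])\s+", answer.strip()):
        words = _tokens(sentence)
        if not words:
            continue
        best = max((len(words & st) / len(words) for st in source_tokens), default=0.0)
        if best < min_overlap:
            flagged.append(sentence)
    return flagged
```

Flagged sentences are good candidates for the human review step, especially in critical or sensitive decisions.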

Risk #10 - AI System Availability and Denial of Service

AI-Specific Availability Threats

AI is extremely resource-intensive, which heightens the risk of denial-of-service attacks.

Essentially, attackers can overload models with intentionally complex or extensive queries, draining the model’s resources and rendering it unusable for others. Large-scale inference DDoS attacks can also entirely shut down public-facing AI services.

Training pipelines are also vulnerable to disruption, and compromised infrastructure can lead to GPU hijacking for unauthorized workloads.

Sponge attacks are one example of how attackers reduce AI system availability (particularly for software that runs in real time). These work to overload LLM inference with hugely expensive prompts, forcing the AI model to consume more power. The result is a sluggish system and overwhelmed applications.

Business Continuity Impact

When AI systems become unavailable or are too slow to use efficiently, this has a knock-on effect for AI-dependent operations.

Workflows that rely on automated decision-making or real-time responses may halt completely, creating delays and financial losses from downtime.

As a result, service level agreements are quickly breached, and the customers quite rightly express their dissatisfaction.

It also places you at a competitive disadvantage. If your rival organizations are running full service, it doesn’t take long before your clients will jump ship.

Additionally, in environments where multiple systems depend on one model’s output, a single failure can cascade into broader operational issues. The longer the outage, the harder it becomes to recover both performance and trust.

Availability Protection

AI system availability can be maintained by limiting how much strain attackers can place on the system at any one time.

Throttling queries and limiting rates prevent abuse, while resource quotas and cost controls keep workloads from exhausting the AI’s capacity limits.

You can also ensure functionality during performance dips by implementing distributed inference infrastructure and fallback mechanisms.

For an additional line of defense (particularly against large-scale disruption), use dedicated DDoS protections that have been specifically adapted for AI endpoints.
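Resource quotas for inference differ from plain request counting because a single expensive prompt can cost as much as hundreds of cheap ones. The sketch below charges each client by estimated prompt size and rejects oversized prompts outright. The class name, the limits, and the heuristic of roughly four characters per token are assumptions for illustration.

```python
class TokenBudget:
    """Per-client budget that refills over time and charges by estimated cost."""

    def __init__(self, capacity, refill_per_second, max_prompt_chars):
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.max_prompt_chars = max_prompt_chars
        self._state = {}  # client_id -> (tokens_left, last_seen)

    def admit(self, client_id, prompt, now):
        if len(prompt) > self.max_prompt_chars:
            return False  # reject oversized prompts outright
        tokens, last = self._state.get(client_id, (self.capacity, now))
        # Refill in proportion to elapsed time, never above capacity.
        tokens = min(self.capacity, tokens + (now - last) * self.refill_per_second)
        cost = max(1, len(prompt) // 4)  # rough heuristic: ~4 characters per token
        if cost > tokens:
            self._state[client_id] = (tokens, now)
            return False
        self._state[client_id] = (tokens - cost, now)
        return True
```

Charging by estimated cost targets the sponge-style attacks described earlier, where the damage comes from how expensive each request is and not from how many are sent.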

Getting Started with AI Security Risk Management

Before you start deploying security frameworks, you need to know what AI systems you currently have and how they’re being used.

You must also evaluate your existing security protocols to find out where the gaps lie. This will help you understand where to focus your efforts first.

Immediate Actions

With the above in mind, here are the actions you need to perform:

- Conduct AI asset discovery: Identify all models, datasets, APIs, and shadow AI tools.

- Assess security posture and gaps: Perform a full audit of existing security controls to pinpoint gaps in data protection, access control, and monitoring.

- Identify high-risk deployments: Flag which models are high-risk, especially if they’re public-facing or handle sensitive data.

- Implement basic controls: Start with the core safeguards, such as authentication, rate limiting, data governance, and basic logging.

Once foundational controls are in place, you can move on to the next phase: building a mature and scalable AI security program.

For more information on AI data security, get in touch with Cyera and discover how its platform can protect your organization.
